Although Google has said that sites using HTTPS may be given a minor ranking boost, many websites have seen losses because of incorrect implementation. Columnist Tony Edward discusses common issues and how to fix them.

Google has been making the push for sites to move to HTTPS, and many folks have already started to include this in their SEO strategy. Recently at SMX Advanced, Gary Illyes from Google said that 34 percent of Google search results are HTTPS. That’s more than I personally expected, but it’s a good sign, as more sites are becoming secure.

However, I’m increasingly noticing sites that have migrated to HTTPS incorrectly and may be losing out on the HTTPS ranking boost. Some have even created new problems on their sites by not migrating correctly.

HTTPS post-migration issues

One common issue I notice after a site migrates to HTTPS is that the HTTPS version is not set as the preferred one, and the HTTP version is still live. In December 2015, Google said that in scenarios like this it would index the HTTPS version by default.

However, having two site versions live still causes the following problems:

- Duplicate content

- Link dilution

- Waste of search engine crawl budget

Duplicate content

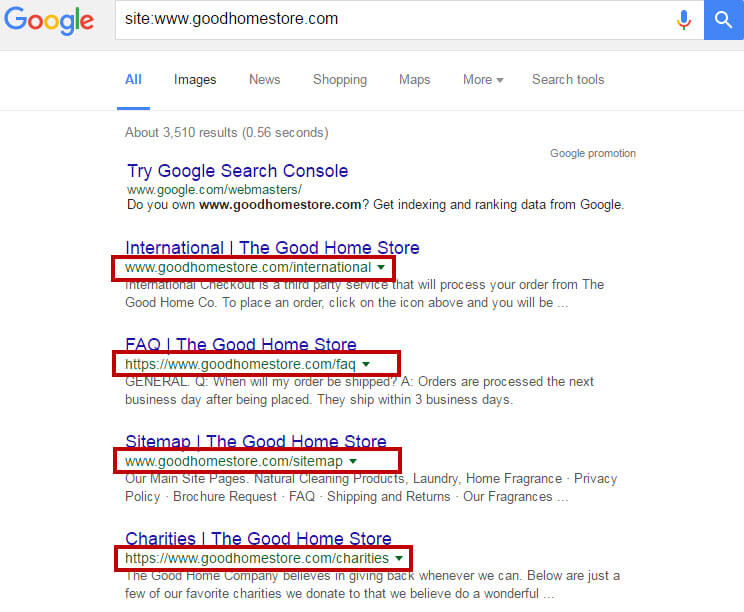

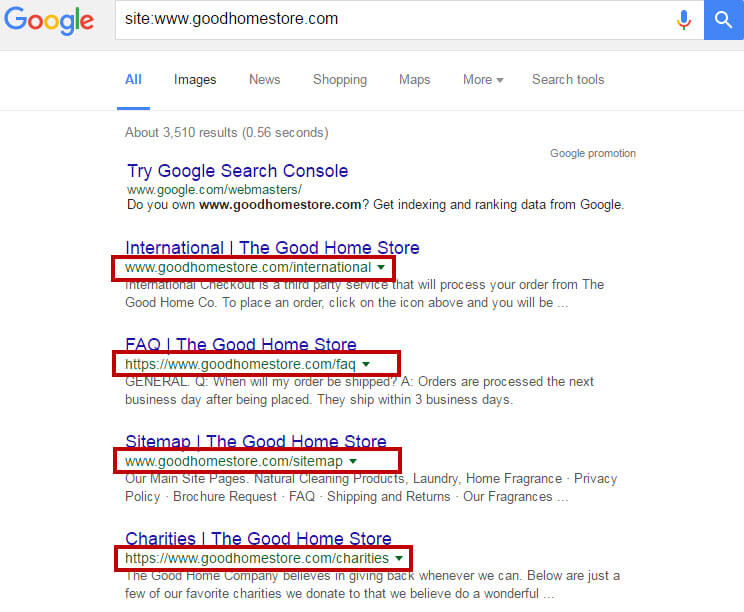

If canonical tags are not leveraged, Google sees two site versions live, which is considered duplicate content. For example, the following site has both HTTPS and HTTP versions live and is not leveraging canonical tags.

Because of this incorrect setup, both the HTTP and HTTPS versions of the site are indexed.

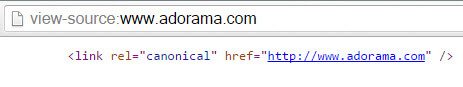

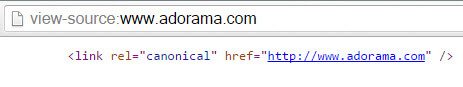

I’ve also seen sites that have canonical tags in place, but with an incorrect setup. For example, Adorama.com has both HTTP and HTTPS versions live, and both versions self-canonicalize. This does not eliminate the duplicate content issue.

http://www.adorama.com/

https://www.adorama.com/

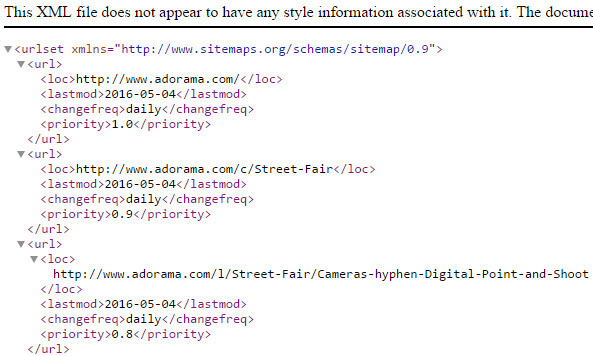

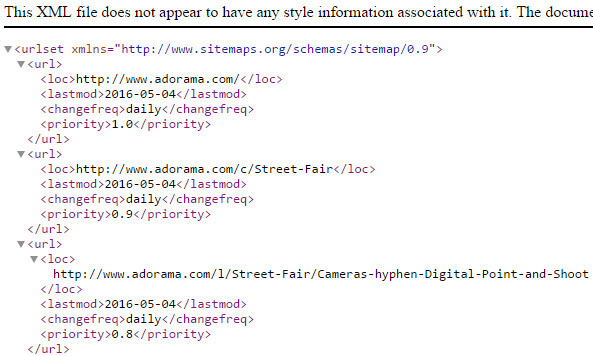
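To audit this yourself, a small script can pull the canonical tag out of the HTTP and HTTPS versions of a page and flag anything that does not point to HTTPS. The sketch below is a minimal Python example, assuming you have already fetched each page’s HTML (with curl, a crawler or similar); the function names and the example.com URLs are hypothetical. It only reads `<link rel="canonical">` tags in the HTML (not canonicals sent in HTTP headers), and it expects absolute canonical URLs.

```python
from html.parser import HTMLParser
from typing import List, Optional


class CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr_map = dict(attrs)
        # rel may hold several space-separated tokens, so split before matching
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        if "canonical" in rel_tokens and attr_map.get("href"):
            self.canonical = attr_map["href"]


def find_canonical(html: str) -> Optional[str]:
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


def canonical_problems(http_html: str, https_html: str) -> List[str]:
    """Flags a missing canonical tag, or one that points anywhere other than HTTPS."""
    problems = []
    for label, html in (("HTTP", http_html), ("HTTPS", https_html)):
        canonical = find_canonical(html)
        if canonical is None:
            problems.append(f"{label} page has no canonical tag")
        elif not canonical.lower().startswith("https://"):
            problems.append(f"{label} page canonicalizes to non-HTTPS URL {canonical}")
    return problems


# Hypothetical pages mirroring the "both versions self-canonicalize" setup
http_page = '<head><link rel="canonical" href="http://www.example.com/"></head>'
https_page = '<head><link rel="canonical" href="https://www.example.com/"></head>'
print(canonical_problems(http_page, https_page))
# ['HTTP page canonicalizes to non-HTTPS URL http://www.example.com/']
```

An empty list means both versions canonicalize to HTTPS URLs.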

Adorama’s XML sitemap also lists the HTTP URLs instead of the HTTPS versions.
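Checking a sitemap for leftover HTTP URLs is easy to automate. This is a minimal sketch, assuming a standard sitemaps.org URL sitemap that you have already downloaded as a string; the function name and example.com URLs are hypothetical, and sitemap index files (which use `<sitemap>` rather than `<url>` entries) are not handled.

```python
import xml.etree.ElementTree as ET
from typing import List

# Namespace used by standard sitemaps.org XML sitemaps
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def non_https_sitemap_urls(sitemap_xml: str) -> List[str]:
    """Returns every <loc> entry in a URL sitemap that is not an HTTPS URL."""
    root = ET.fromstring(sitemap_xml)
    locs = [el.text.strip() for el in root.findall("sm:url/sm:loc", SITEMAP_NS) if el.text]
    return [loc for loc in locs if not loc.lower().startswith("https://")]


example_sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>http://www.example.com/</loc></url>
  <url><loc>https://www.example.com/cameras/</loc></url>
</urlset>"""

print(non_https_sitemap_urls(example_sitemap))  # ['http://www.example.com/']
```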

Link dilution

Having both the HTTPS and HTTP versions live, even with canonical tags in place, can cause link dilution. Different users will come across different versions of the site and share and link to whichever one they found, so social signals and external link equity get split across two URLs instead of consolidated into one.

Waste of search engine crawl budget

If canonical tags are not leveraged and both versions are live, search engines will end up crawling both, which wastes crawl budget. Instead of crawling just the preferred version, they have to do double the work, which can be problematic for very large sites.

The ideal setup to address the issues above is to 301 redirect every HTTP URL to its HTTPS version sitewide. This eliminates the duplication, the link dilution and the wasted crawl budget.
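Sitewide redirects are usually configured in the web server, load balancer or CDN, but the logic is the same everywhere: rebuild the requested URL with `https://` and answer with a 301. As an illustration only, here is a hypothetical Python WSGI middleware (`force_https` and `https_location` are made-up names). It assumes TLS terminates at this server; behind a proxy that handles TLS, the app may see every request as plain HTTP and this would loop.

```python
from urllib.parse import quote


def https_location(environ) -> str:
    """Rebuilds the requested URL with an https:// scheme (host, path and query kept)."""
    host = environ.get("HTTP_HOST") or environ["SERVER_NAME"]
    if host.endswith(":80"):
        host = host[:-3]  # drop the plain-HTTP port so the redirect uses the default 443
    path = environ.get("SCRIPT_NAME", "") + environ.get("PATH_INFO", "")
    # WSGI hands paths over as latin-1 decoded strings; re-encode them for the URL
    url = "https://" + host + quote(path or "/", safe="/;=,", encoding="latin1")
    query = environ.get("QUERY_STRING")
    if query:
        url += "?" + query
    return url


def force_https(app):
    """Hypothetical WSGI middleware: 301-redirects every plain-HTTP request to HTTPS."""

    def middleware(environ, start_response):
        # Assumes TLS terminates here; behind a TLS-terminating proxy, wsgi.url_scheme
        # may read "http" even for HTTPS traffic, which would cause a redirect loop.
        if environ.get("wsgi.url_scheme") == "https":
            return app(environ, start_response)
        start_response(
            "301 Moved Permanently",  # permanent redirect, not a 302
            [("Location", https_location(environ)), ("Content-Length", "0")],
        )
        return [b""]

    return middleware
```

You would wrap an existing WSGI application with `application = force_https(application)`. Keeping the path and query string intact matters: each HTTP URL should land on its exact HTTPS counterpart, not on the homepage.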

Be sure not to use 302 redirects, which are temporary redirects. I’ve come across a site doing exactly this, and in the wrong direction: it 302 redirects its HTTPS URLs to HTTP. It should be the reverse, with HTTP URLs 301 redirecting to HTTPS.
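To confirm which kind of redirect a URL returns, request it once without following redirects and look at the status code and `Location` header. The sketch below uses only Python’s standard library; the function names and URLs are hypothetical, and it sends a `HEAD` request, which assumes the server answers `HEAD` the same way it answers `GET`.

```python
import http.client
from typing import List, Optional, Tuple
from urllib.parse import urljoin, urlsplit


def fetch_redirect(url: str, timeout: float = 10.0) -> Tuple[int, Optional[str]]:
    """Sends one HEAD request without following redirects; returns (status, Location)."""
    parts = urlsplit(url)
    conn_cls = http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    conn = conn_cls(parts.netloc, timeout=timeout)
    target = (parts.path or "/") + ("?" + parts.query if parts.query else "")
    try:
        conn.request("HEAD", target)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def redirect_issues(url: str, status: int, location: Optional[str]) -> List[str]:
    """Flags temporary redirects and redirects that do not land on HTTPS."""
    issues = []
    if status in (302, 303, 307):
        issues.append(f"temporary redirect ({status}); expected a 301")
    elif status != 301:
        issues.append(f"expected a 301 redirect, got status {status}")
    if location is not None:
        destination = urljoin(url, location)  # Location may be relative
        if urlsplit(destination).scheme != "https":
            issues.append(f"redirect target is not HTTPS: {destination}")
    elif 300 <= status < 400:
        issues.append("redirect has no Location header")
    return issues


# Usage against a live site (hypothetical URL):
# status, location = fetch_redirect("http://www.example.com/")
# print(redirect_issues("http://www.example.com/", status, location))
```

Run against the HTTPS homepage of the site described above, `redirect_issues` would report both the temporary redirect and the non-HTTPS target.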

Here is a list of the best practices for a correct HTTPS setup to avoid SEO issues:

- Ensure your HTTPS site version is added in Google Search Console and Bing Webmaster Tools. In Google Search Console, add both the www and non-www versions. Set your preferred domain under the HTTPS versions.

- 301 redirect HTTP URL versions to their HTTPS versions sitewide.

- Ensure all internal links point to the HTTPS version URLs sitewide.

- Ensure canonical tags point to the HTTPS URL versions.

- Ensure your XML sitemap includes the HTTPS URL versions.

- Ensure all external links to your site that are under your control, such as social profiles, point to the HTTPS URL versions.
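The internal-link item on this list is the easiest to miss, because hard-coded `http://` links often survive a migration in templates and old content. Here is a minimal sketch for checking one page, assuming you already have its HTML and know which hostnames belong to your site; the names and example.com URLs are hypothetical, and it only looks at `<a href>` links.

```python
from html.parser import HTMLParser
from typing import Iterable, List
from urllib.parse import urljoin, urlsplit


class AnchorCollector(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def insecure_internal_links(page_url: str, html: str, site_hosts: Iterable[str]) -> List[str]:
    """Returns internal links on the page that still resolve to http:// URLs."""
    hosts = {h.lower() for h in site_hosts}
    collector = AnchorCollector()
    collector.feed(html)
    flagged = []
    for href in collector.hrefs:
        absolute = urljoin(page_url, href)  # relative links inherit the page's scheme
        parts = urlsplit(absolute)
        if parts.scheme == "http" and parts.hostname in hosts:
            flagged.append(absolute)
    return flagged


page = (
    '<a href="/cameras/">Cameras</a>'
    '<a href="http://www.example.com/lenses/">Lenses</a>'
    '<a href="http://partner.example.org/">Partner</a>'
)
print(insecure_internal_links("https://www.example.com/", page, {"www.example.com", "example.com"}))
# ['http://www.example.com/lenses/']
```

Relative links are fine once the page itself is served over HTTPS; it is the absolute `http://` links to your own hosts that need updating. Running this across every crawled page gives a sitewide list.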